Facebook Enhances its Features to Help Prevent Suicide and Self-Harm

Social media has undoubtedly become a major driver of change in this day and age, as well as a powerful medium for transmitting information. Recognizing both its responsibility and its reach, social media giant Facebook has introduced several new features to help prevent suicide and self-harm, which have grown at an alarming rate in the last few years.

On World Suicide Prevention Day, Facebook unveiled a set of guidelines it has introduced to limit content related to self-injury, suicide and self-harm through its tools, and to keep individuals who are vulnerable to such ideas away from harmful information.

“We tightened our policy around self-harm to no longer allow graphic cutting images to avoid unintentionally promoting or triggering self-harm, even when someone is seeking support or expressing themselves to aid their recovery. On Instagram, we’ve also made it harder to search for this type of content and kept it from being recommended in Explore,” said Facebook in its official statement.

The statement further read, “We’ve also taken steps to address the complex issue of eating disorder content on our apps by tightening our policy to prohibit additional content that may promote eating disorders. And with these stricter policies, we’ll continue to send resources to people who post content promoting eating disorders or self-harm, even if we take the content down.”

“Lastly, we chose to display a sensitivity screen over healed self-harm cuts to help avoid unintentionally promoting self-harm,” concluded the social media giant.

All these changes follow Facebook's year-long consultation with health and well-being experts on how to appropriately handle suicide notes, the risks of sad content online, and newsworthy depictions of suicide.

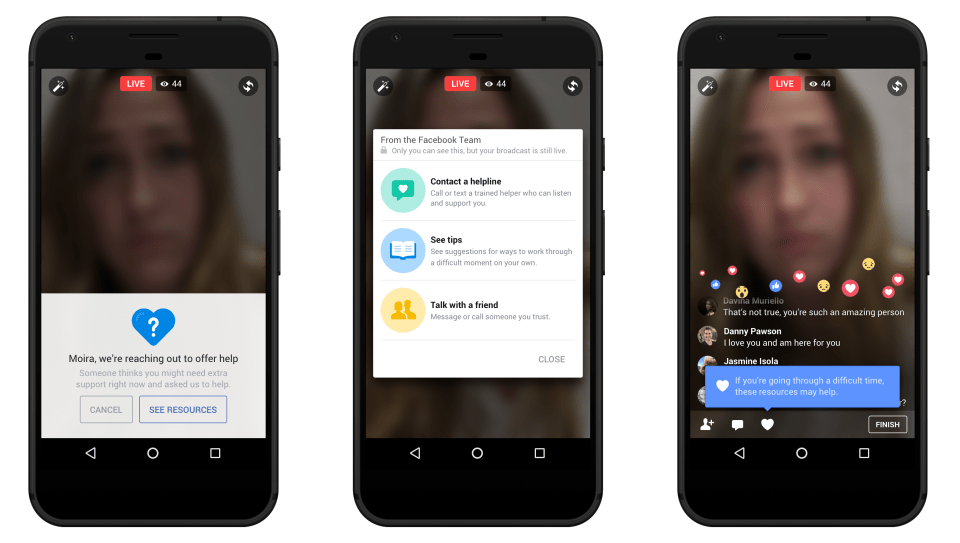

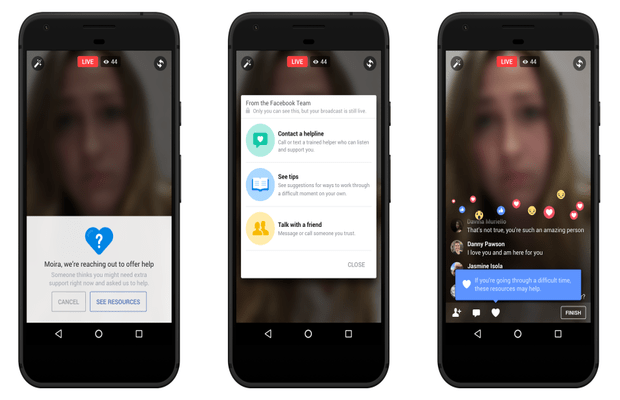

As a result of these consultations, a 'Suicide Prevention' feature has been added to Facebook's Safety Center. In addition, the social media platform will be hiring a health and well-being expert for its team.

"This person will focus exclusively on the health and well-being impacts of our apps and policies, and will explore new ways to improve support for our community, including on topics related to suicide and self-injury," said Facebook.

In addition to the consultations, debates, and introduction of new features, Facebook has also tightened its content-sharing policies and stepped up its scrutiny of potentially harmful content.

From April to June of 2019, Facebook took action on more than 1.5 million pieces of suicide and self-injury content on Facebook and found more than 95% of it before it was reported by a user. Similarly, the social media giant took action on more than 800,000 pieces of [similar] content on Instagram during the same time period.